Reducing Semantic Distortion of Multiword Expressions for Topic Modeling with Latent Dirichlet Allocation

Abstract

The Makan Bergizi Gratis (MBG) free nutritious meal program is one of the Indonesian government’s priority initiatives and has received significant coverage in online media. To identify the main themes in this coverage, this study applies topic modeling using Latent Dirichlet Allocation (LDA). The results of topic modeling, however, depend heavily on the preprocessing stage, particularly on how multiword expressions (MWEs) such as named entities, collocations, and compound words are handled. This study compares two preprocessing approaches, basic and extended, where the extended approach additionally masks MWEs. Experimental results show that the extended preprocessing model achieved the highest coherence score of 0.5149 at K = 22, with four other scores also exceeding 0.496, whereas the basic preprocessing model reached a maximum of only 0.3932 at K = 10. Furthermore, cosine similarity scores between topics were lower in the extended model (maximum 0.7406) than in the basic model (maximum 0.8244), indicating that its topics were more diverse and overlapped less. These findings highlight the importance of preprocessing strategies that preserve phrase-level meaning in order to reduce semantic distortion and improve topic coherence and representation, particularly when analyzing media discourse on public policy programs such as MBG.
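The two ideas the abstract relies on, masking MWEs before tokenization and comparing topics by cosine similarity, can be illustrated with a minimal sketch. It is not the study's implementation: the MWE lexicon, the function names, and the toy topic weights are all assumptions made for illustration, and the abstract does not state how the MWEs were actually detected or masked.

```python
import math
import re

# Hypothetical MWE lexicon (assumption); the study's actual list is not given.
MWES = ["makan bergizi gratis", "badan gizi nasional"]


def mask_mwes(text, mwes=MWES):
    """Join each listed multiword expression into one underscore token."""
    masked = text.lower()
    # Process longer phrases first so a shorter MWE cannot split a longer one.
    for phrase in sorted(mwes, key=len, reverse=True):
        pattern = r"\b" + r"\s+".join(re.escape(w) for w in phrase.split()) + r"\b"
        masked = re.sub(pattern, phrase.replace(" ", "_"), masked)
    return masked


def tokenize(text):
    """Split on anything that is not a lowercase letter or underscore."""
    return re.findall(r"[a-z_]+", text)


def cosine_similarity(p, q):
    """Cosine similarity between two topics given as {word: weight} dicts."""
    dot = sum(weight * q.get(word, 0.0) for word, weight in p.items())
    norm_p = math.sqrt(sum(v * v for v in p.values()))
    norm_q = math.sqrt(sum(v * v for v in q.values()))
    if norm_p == 0.0 or norm_q == 0.0:
        return 0.0
    return dot / (norm_p * norm_q)


tokens = tokenize(mask_mwes("Program Makan Bergizi Gratis dimulai"))
print(tokens)  # ['program', 'makan_bergizi_gratis', 'dimulai']

# Toy topic-word weights (assumption), only to show the comparison step.
topic_a = {"makan_bergizi_gratis": 0.6, "sekolah": 0.4}
topic_b = {"anggaran": 0.7, "sekolah": 0.3}
print(round(cosine_similarity(topic_a, topic_b), 4))
```

Without masking, the phrase would be split into "makan", "bergizi", and "gratis", each of which can end up in a different topic; keeping it as one token is what preserves the phrase-level meaning. A lower maximum pairwise cosine similarity across all topic pairs corresponds to the less overlapping topics reported for the extended model.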

Abstract views: 406 times

Abstract views: 406 times Download PDF: 151 times

Download PDF: 151 times

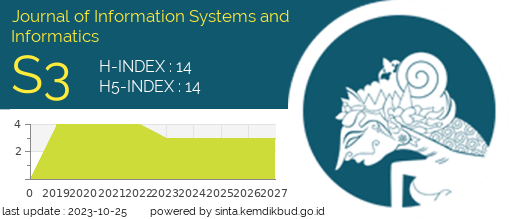

Copyright (c) 2025 Journal of Information Systems and Informatics

This work is licensed under a Creative Commons Attribution 4.0 International License.
